Apple has introduced a new artificial intelligence model that can convert a single photograph into a three-dimensional scene in under a second, a breakthrough that could reshape the way images are experienced across devices.

The model, known as SHARP (Sharp Monocular View Synthesis in Less Than a Second), was detailed in a research paper published this week and has already been made available as open-source software.

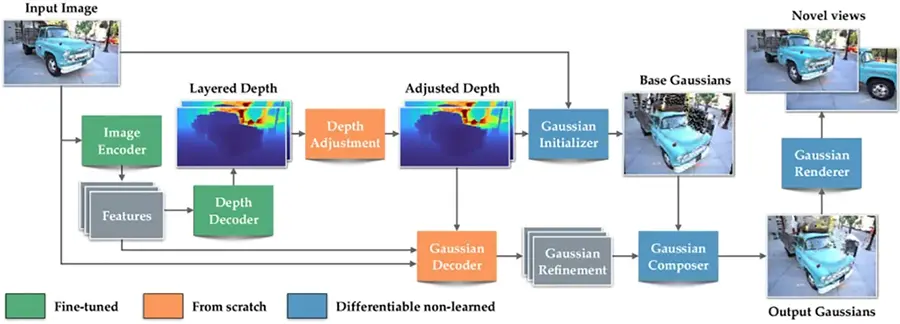

Unlike traditional 3D reconstruction methods, which often require dozens of images taken from multiple angles, SHARP is able to generate a full 3D Gaussian representation from just one photo.

This is achieved through a single feedforward pass of a neural network, allowing the system to produce results in less than a second on a standard GPU.

Apple researchers explained: “Given a single photograph, SHARP regresses the parameters of a 3D Gaussian representation of the depicted scene. This is done in less than a second on a standard GPU via a single feedforward pass through a neural network.”

The model works by predicting the depth and geometry of a scene, drawing on patterns learned from extensive training on both synthetic and real-world datasets.

Millions of 3D Gaussian “blobs” of colour and light are positioned in space to recreate the scene, which can then be rendered in real time.
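To make the idea of positioning Gaussians in space more concrete, here is a minimal sketch in Python of how a per-pixel depth map could be lifted into one Gaussian per pixel. It is an illustration only and not Apple's implementation: SHARP predicts the Gaussian parameters with a neural network, whereas this sketch assumes a depth map is already available and uses a simple pinhole camera. The function names `unproject_depth` and `init_gaussians`, the `focal_px` parameter, and the choice of isotropic, fully opaque Gaussians are all hypothetical.

```python
import numpy as np

def unproject_depth(depth, focal_px):
    """Lift an H x W depth map to an (H*W, 3) array of camera-space points.

    Assumes a pinhole camera whose principal point is the image centre.
    """
    h, w = depth.shape
    # Pixel-centre coordinates for every pixel in the image.
    u, v = np.meshgrid(np.arange(w) + 0.5, np.arange(h) + 0.5)
    x = (u - w / 2.0) * depth / focal_px
    y = (v - h / 2.0) * depth / focal_px
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)

def init_gaussians(image, depth, focal_px):
    """Build one isotropic Gaussian per pixel (an illustrative simplification)."""
    means = unproject_depth(depth, focal_px)
    colors = image.reshape(-1, 3)
    # Scale each Gaussian to roughly one pixel's footprint at its depth.
    scales = (depth / focal_px).reshape(-1, 1)
    opacities = np.ones((means.shape[0], 1))
    return {"means": means, "colors": colors,
            "scales": scales, "opacities": opacities}

# Tiny example: a flat wall two units away, seen by a 4 x 2 pixel camera.
image = np.zeros((2, 4, 3))
depth = np.full((2, 4), 2.0)
gaussians = init_gaussians(image, depth, focal_px=4.0)
```

A 1,000 by 1,000 pixel photo handled this way would yield a million Gaussians, which gives a sense of where counts in the millions come from.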

This enables users to view the image from slightly different angles, creating the impression of standing within the scene itself.

Performance benchmarks suggest SHARP sets a new standard in the field. It reduces perceptual error metrics such as LPIPS by up to 34 percent and DISTS by as much as 43 percent compared with previous leading models, while cutting synthesis time by three orders of magnitude, or roughly a thousandfold.
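For readers who want to see how such figures are read, the short Python sketch below shows the arithmetic behind a percentage reduction in an error metric and behind a speed-up expressed in orders of magnitude. The input numbers are hypothetical placeholders chosen to match the headline percentages; they are not measurements from the paper.

```python
import math

def relative_reduction(baseline, new):
    """Fraction by which an error metric drops (lower scores are better)."""
    return (baseline - new) / baseline

def orders_of_magnitude(baseline_seconds, new_seconds):
    """Speed-up expressed as a power of ten."""
    return math.log10(baseline_seconds / new_seconds)

# Hypothetical scores: an error metric falling from 0.50 to 0.33.
lpips_drop = relative_reduction(0.50, 0.33)

# Hypothetical timings: 500 seconds per scene versus 0.5 seconds.
speedup = orders_of_magnitude(500.0, 0.5)

print(f"Error reduced by about {lpips_drop:.0%}")
print(f"Speed-up of about {speedup:.0f} orders of magnitude")
```

Three orders of magnitude is a factor of about 1,000, so a method that once took several minutes per image would finish in well under a second.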

The trade-off, however, is that SHARP excels at rendering nearby viewpoints but does not generate entirely unseen parts of a scene. This design favours speed and stability but restricts exploration beyond the original vantage point.

By releasing SHARP as open-source software, Apple is inviting developers and researchers worldwide to experiment with and build upon the technology.

Early adopters have already begun sharing demonstrations online, showcasing how ordinary photos can be transformed into immersive 3D experiences.

Analysts suggest the model could have wide-ranging applications in augmented reality, virtual reality, gaming, and even journalism, where spatial storytelling is gaining traction.

For Apple, SHARP represents another step in its growing emphasis on artificial intelligence and machine learning.

With iOS 26 already introducing “Spatial Scenes” for photos, this research points towards future consumer features that could make immersive content creation accessible to everyday users.

As the technology spreads, SHARP may well become a cornerstone of next-generation digital experiences, bridging the gap between static photography and interactive 3D environments.

Sources: 9to5Mac, AppleInsider